EmotionEcho.exe

Skylar (Siqi) Zhang

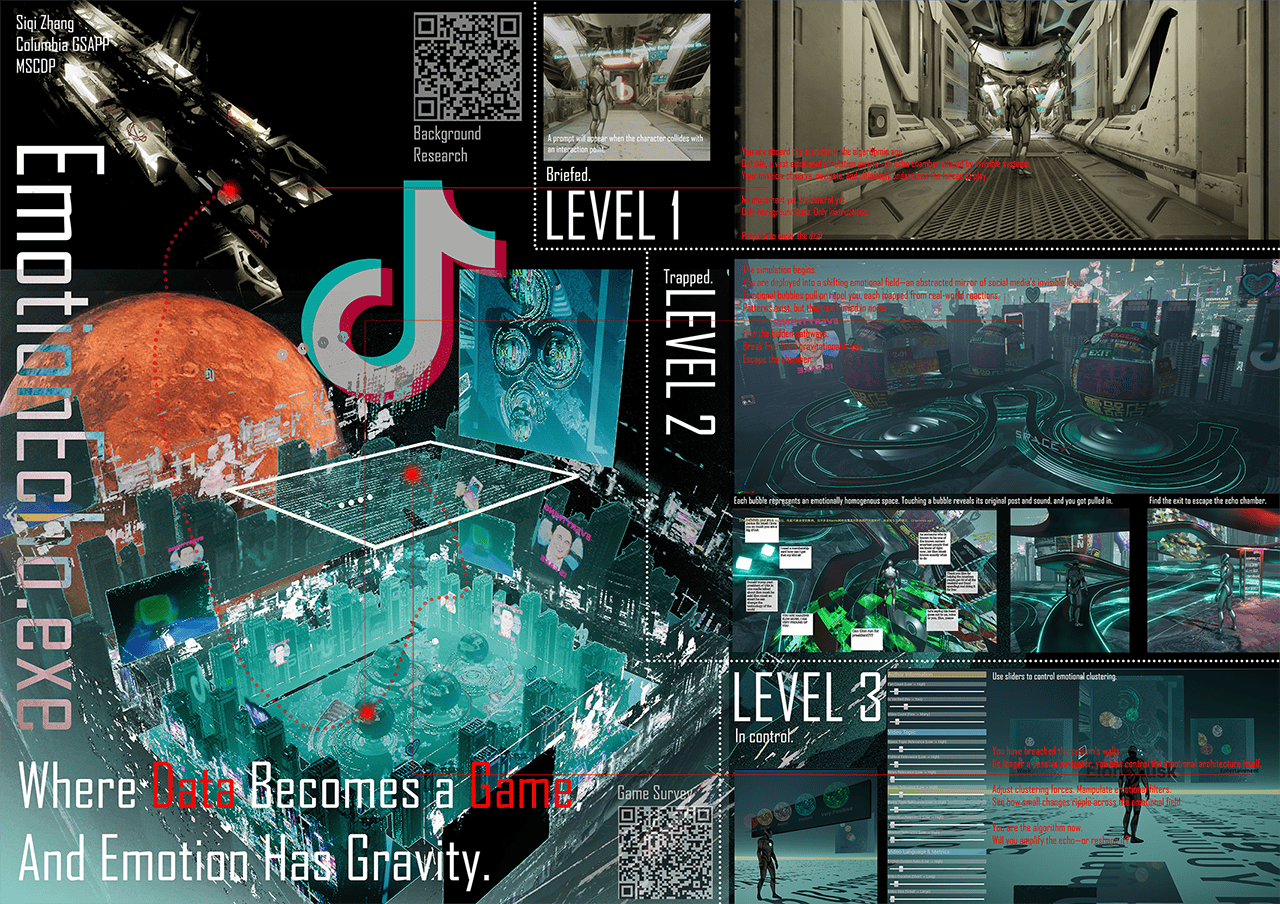

EmotionEcho.exe is a three-stage interactive experience that visualizes how emotional landscapes are shaped and echoed by algorithmic systems like TikTok.

"Have you ever clicked on one video... and suddenly, your feed was filled with the same kind of content, over and over again? At first, it feels natural—maybe even comforting. But slowly, it becomes harder to remember what existed outside that bubble. The space narrows. The emotions loop. Until you're not just watching the content—you're living inside it."

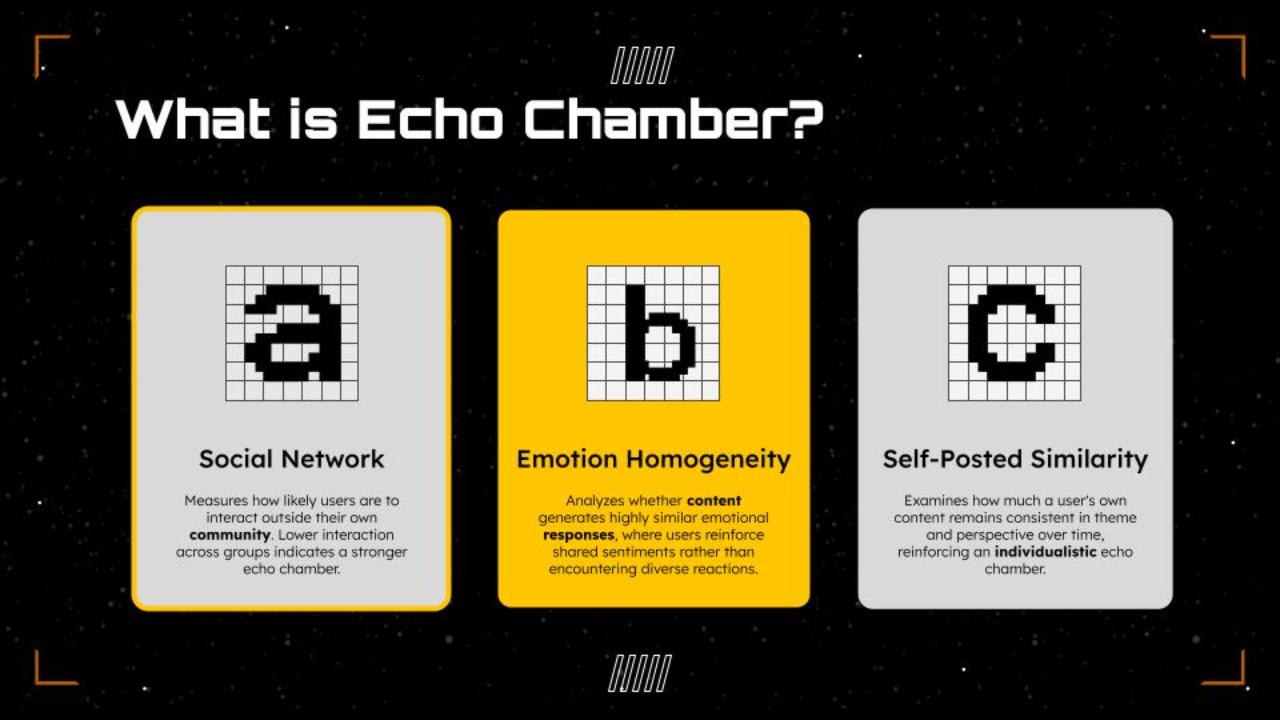

What Is an Echo Chamber?

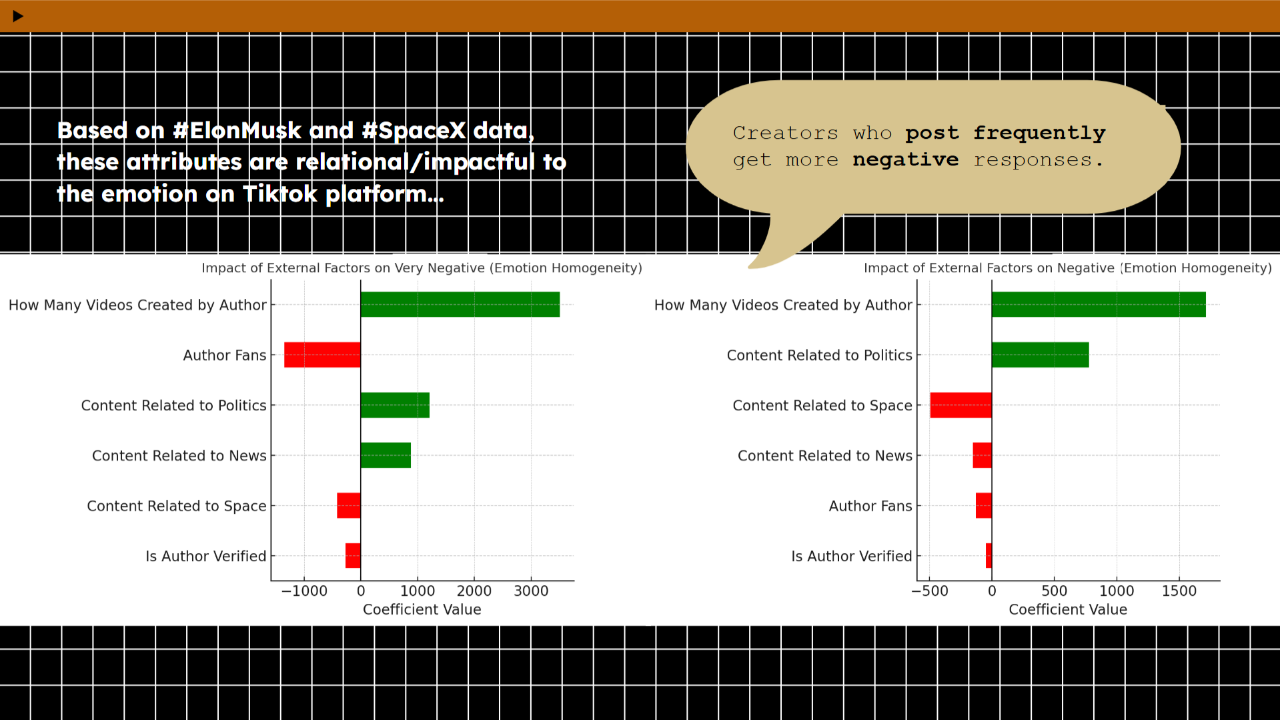

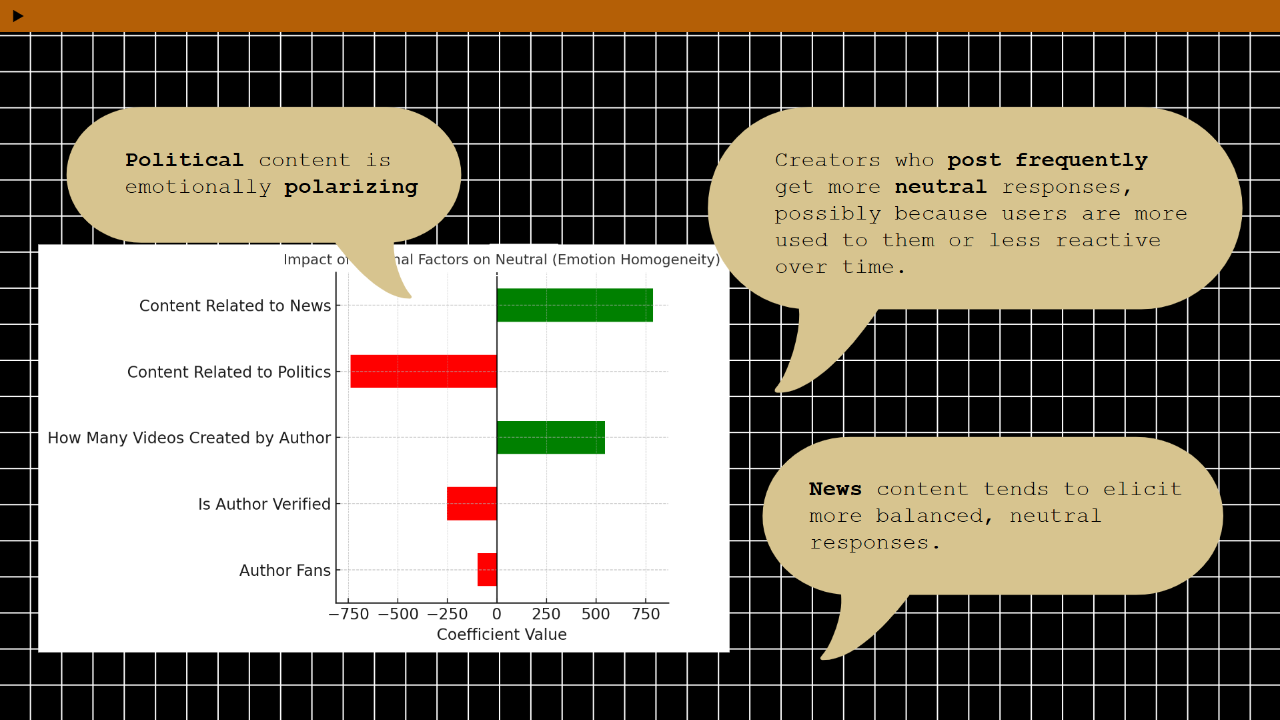

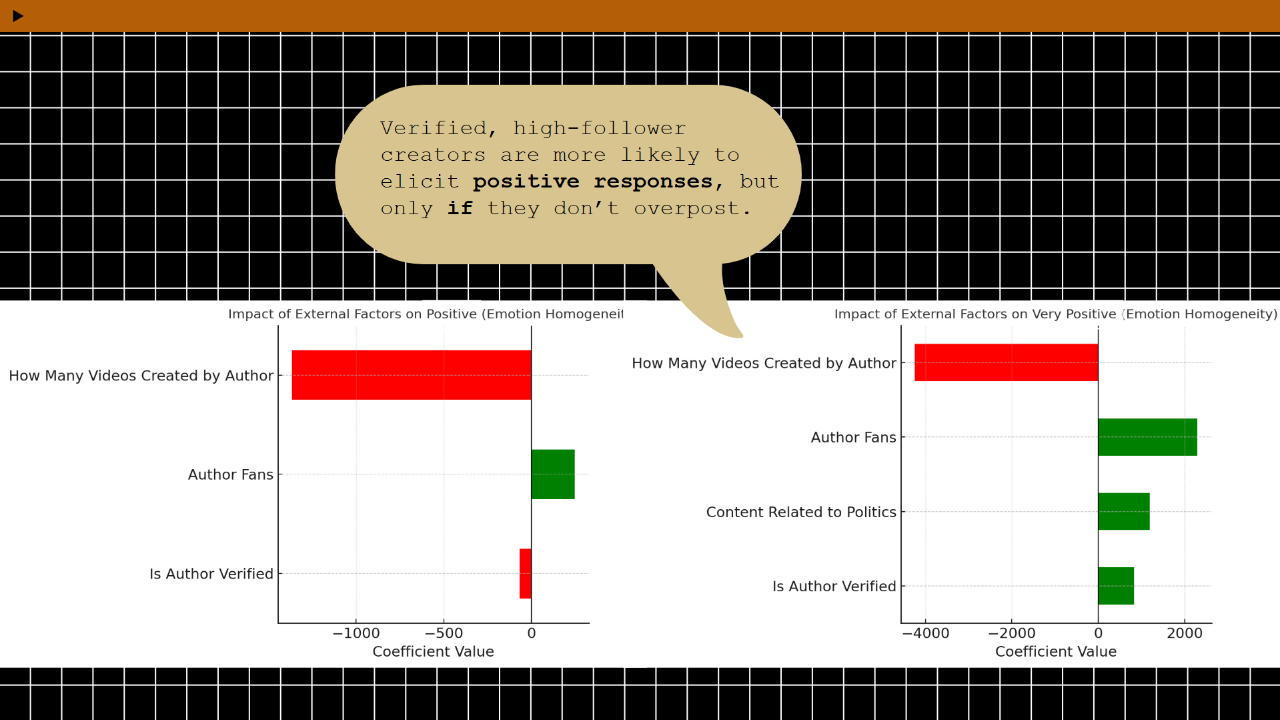

Based on existing research, echo chambers can be understood through three dimensions: Social Network, Emotion Homogeneity, and Self-Posted Similarity. Each represents a different way that online environments reinforce selective exposure. In this project, I focused on Emotion Homogeneity, which analyzes whether content consistently triggers similar emotional responses. This dimension allowed for quantifiable analysis of emotional clustering within algorithmically curated spaces.

Data Preparation

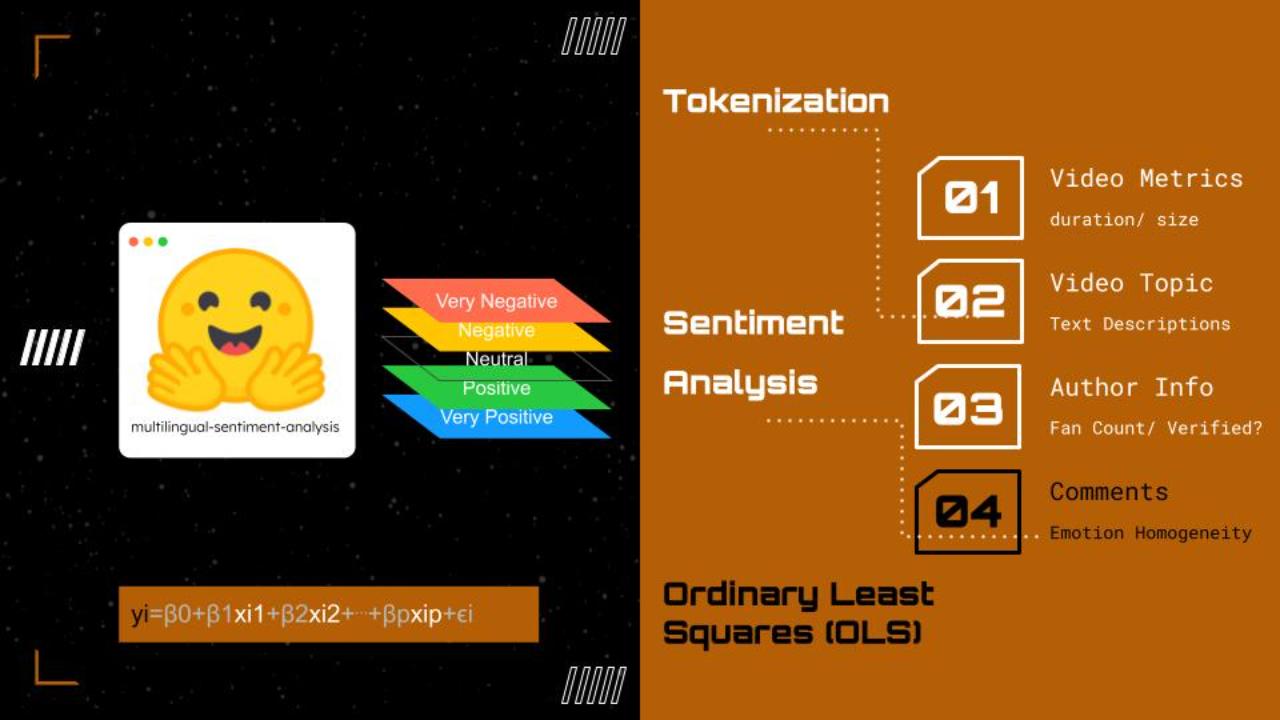

To begin the analysis, I scraped TikTok video pages using BeautifulSoup. From each post, I collected a range of data—including basic video metrics like duration, textual content such as captions and hashtags, and author information like fan count and verification status. But the most important layer was the comments section. That’s where I focused my analysis of emotion homogeneity—looking at whether users responded with similar emotional tones, which could indicate the presence of an emotional echo chamber. This scraping process allowed me to turn a qualitative feed into structured data for further analysis.
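
As a rough illustration of what "structured data" could look like here, the sketch below defines one record per post with the fields listed above. The field names, the `raw` dictionary keys, and the hashtag pattern are all assumptions made for this example; they are not the project's actual schema or scraper output.

```python
import re
from dataclasses import dataclass, field


@dataclass
class PostRecord:
    """One scraped post; field names are hypothetical."""
    duration_s: float
    caption: str
    hashtags: list
    author_fans: int
    author_verified: bool
    comments: list = field(default_factory=list)


def extract_hashtags(caption):
    """Return lowercase hashtags found in a caption, without the '#'."""
    return [tag.lower() for tag in re.findall(r"#(\w+)", caption)]


def make_record(raw):
    """Build a PostRecord from a dict of already-scraped values.

    The keys used here ("duration", "caption", ...) are assumed for
    illustration only.
    """
    caption = raw.get("caption", "")
    return PostRecord(
        duration_s=float(raw.get("duration", 0)),
        caption=caption,
        hashtags=extract_hashtags(caption),
        author_fans=int(raw.get("fans", 0)),
        author_verified=bool(raw.get("verified", False)),
        comments=list(raw.get("comments", [])),
    )
```

Keeping the comments as a list on each record makes the later per-post emotion analysis a simple loop over records.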

After collecting the data, I tokenized each comment to break the text into analyzable units, then used a multilingual sentiment analysis model to classify it into one of five levels, from very negative to very positive. The goal here was to measure emotion homogeneity: whether users commenting on the same post responded with similar emotional tones.
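
One possible way to turn the five-level labels into a single homogeneity score per post is sketched below. The formula (one minus normalized Shannon entropy) is an assumption chosen for illustration; the text does not specify how the project's homogeneity measure was computed. Labels are assumed to be integers from 0 (very negative) to 4 (very positive).

```python
from collections import Counter
from math import log

LEVELS = 5  # very negative (0) through very positive (4)


def emotion_homogeneity(labels):
    """Return 1 minus the normalized Shannon entropy of the labels.

    1.0 means every comment shares one sentiment level; 0.0 means the
    five levels appear equally often.
    """
    if not labels:
        raise ValueError("need at least one comment label")
    counts = Counter(labels)
    total = len(labels)
    entropy = -sum((c / total) * log(c / total) for c in counts.values())
    return 1.0 - entropy / log(LEVELS)
```

For example, a comment section labeled `[4, 4, 4, 0]` scores somewhere between the two extremes, closer to 1 than an evenly split section would.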

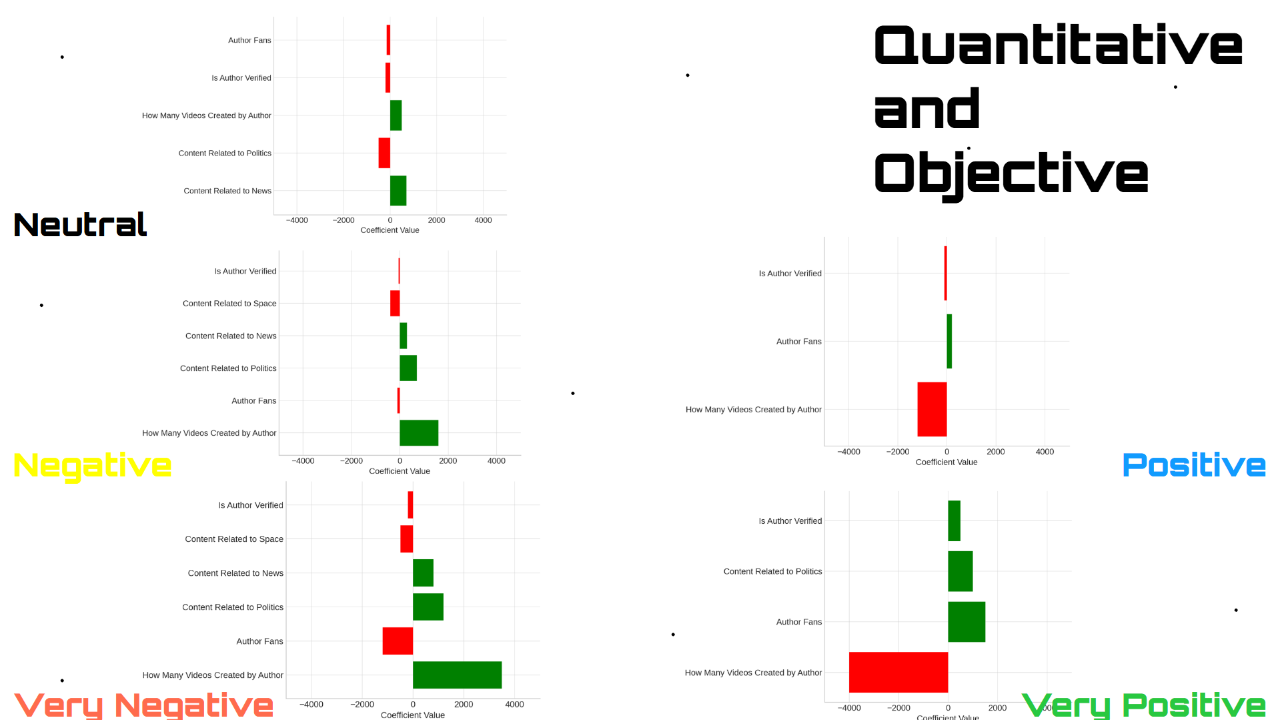

I then combined this emotional data with other features, like video metrics, topics, and author characteristics, and used Ordinary Least Squares (OLS) regression to explore how these variables influenced emotional responses. This helped quantify how emotional clustering happens across different types of content and creators.
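
For reference, the standard OLS setup can be written as follows. The symbols are assumptions for this sketch: $y_i$ stands for the emotional response measure of post $i$, $x_{ik}$ for its $k$-th feature (a video metric, topic indicator, or author characteristic), and $X$ for the feature matrix with a leading column of ones.

```latex
y_i = \beta_0 + \sum_{k=1}^{K} \beta_k x_{ik} + \varepsilon_i,
\qquad
\hat{\beta}
  = \arg\min_{\beta} \sum_{i=1}^{n}
    \Bigl( y_i - \beta_0 - \sum_{k=1}^{K} \beta_k x_{ik} \Bigr)^2
  = (X^\top X)^{-1} X^\top y
```

Each fitted coefficient $\hat{\beta}_k$ estimates how the response changes with a one-unit change in feature $k$, holding the other features fixed.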

Game Prototype

Before building the 3D game, I created a 2D prototype in p5.js, where white dots represent social media users and colored bubbles represent emotional filters—users get pulled in by emotional gravity. I tested three different topics—Work, Entertainment, and Social Issues—and this form of data visualization made me wonder: what if we could explore data playfully by gamifying it?

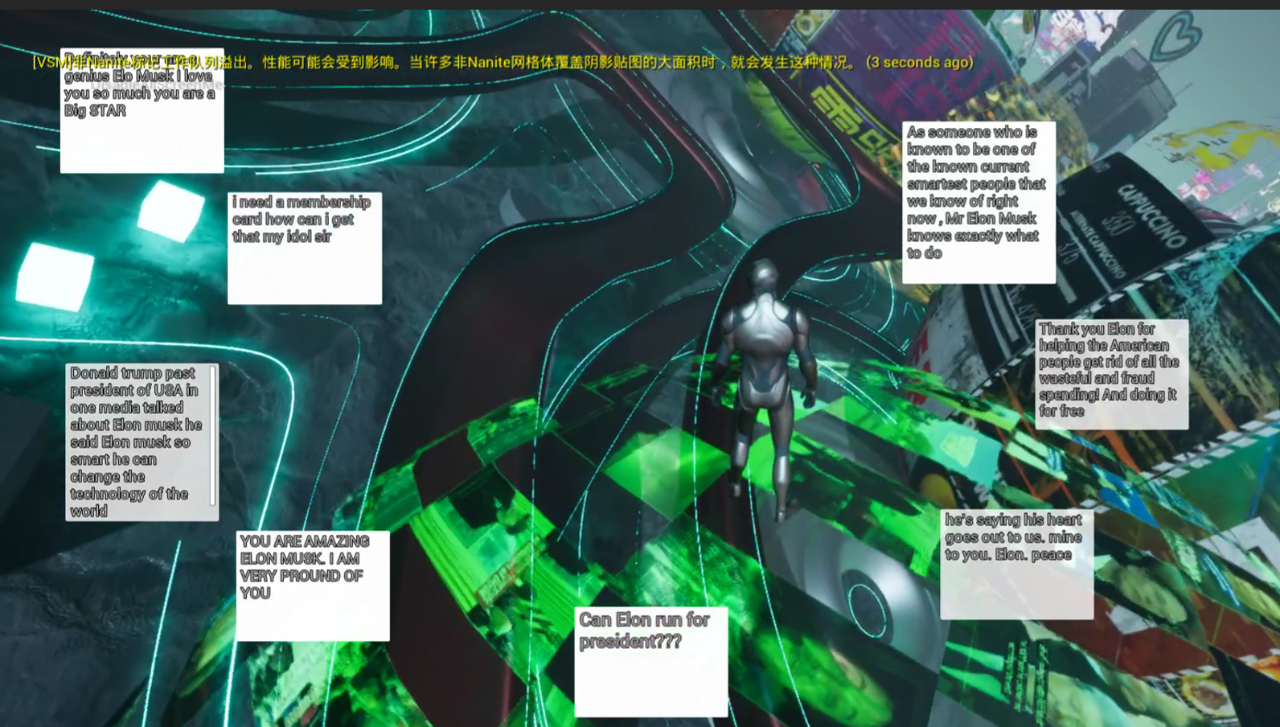

This project reimagines data visualization as a playable experience, allowing users to explore emotional data from the inside. The same framework could be adapted to other topics—for instance, this demo uses TikTok reactions to Elon Musk as gravitational fields, turning viral content into emotional singularities.

Game Design

EmotionEcho.exe was developed using Unreal Engine 5 with a combination of real comment datasets, gravity-based physics simulation, and sentiment classification. Each “bubble” in the game represents an emotional node, whose properties—like size, pull, and color—are mapped to data-derived values (e.g., sentiment score, homogeneity index). By making data feel physical, the game challenges how we emotionally and spatially experience digital platforms.
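
The mapping from data to bubble properties might look something like the sketch below. It is written in Python for readability and is not the Unreal Engine implementation; the value ranges, the use of comment count for size, and the blue-to-red color ramp are all assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass
class BubbleStyle:
    radius: float
    pull: float
    color: tuple  # (r, g, b), each channel in 0..1


def lerp(a, b, t):
    """Linear interpolation that returns exactly a at t=0 and b at t=1."""
    return a * (1.0 - t) + b * t


def clamp01(x):
    return min(max(x, 0.0), 1.0)


def bubble_style(sentiment, homogeneity, comment_count,
                 max_comments=1000, max_pull=10.0):
    """Map data-derived values to visual and physical bubble properties.

    sentiment: mean sentiment level, 0 (very negative) to 4 (very positive)
    homogeneity: 0..1 score for how uniform the comment emotions are
    comment_count: number of comments (hypothetical driver of size)
    """
    t = clamp01(sentiment / 4.0)
    color = (lerp(0.2, 1.0, t), 0.3, lerp(1.0, 0.2, t))  # blue to red
    radius = lerp(0.5, 3.0, clamp01(comment_count / max_comments))
    pull = max_pull * clamp01(homogeneity)
    return BubbleStyle(radius=radius, pull=pull, color=color)
```

Clamping every input keeps outliers in the data from producing bubbles that are invisible or overwhelmingly strong.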

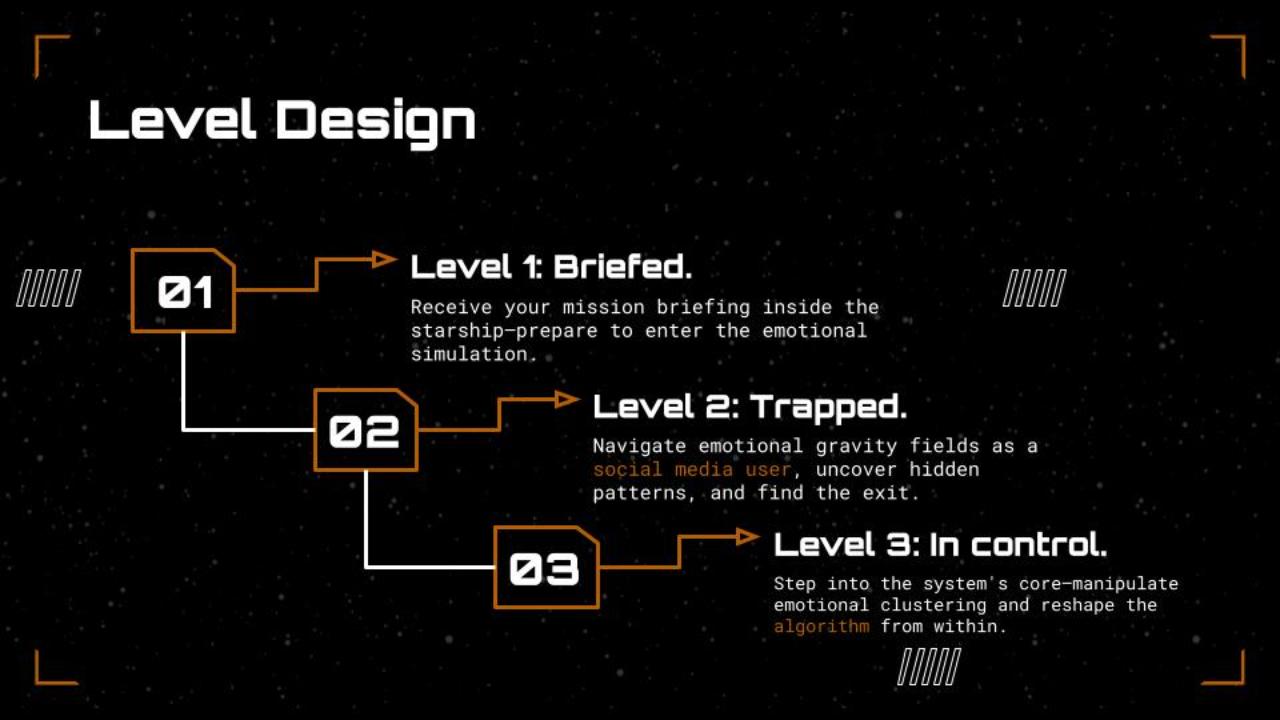

Level 1 — Storytelling Tutorial

The experience begins with a narrated, ambient tutorial space where players drift through emotional fragments. These fragments are drawn from real online comments, algorithmically clustered and embedded in floating bubbles. The goal is not to escape, but to observe: how emotion spreads, accumulates, and creates feedback loops in seemingly neutral environments.

Level 2 — Emotional Gravity

Once immersed, players navigate a distorted emotional terrain shaped by algorithmic influence. Each floating bubble represents a viral post, pulling the player with a gravity proportional to the emotional homogeneity of its comment section. High emotional agreement leads to stronger pull—positive or negative. This is where the player begins to feel trapped, looping in the same affective orbit.
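
A minimal sketch of such a pull is shown below, in Python and not as the game's actual Unreal Engine logic. Only the proportionality to homogeneity comes from the description above; the inverse-square falloff, the `strength` constant, and the `min_dist` clamp are assumptions added for illustration.

```python
import math


def pull_acceleration(player, bubble_center, homogeneity,
                      strength=9.8, min_dist=1.0):
    """Acceleration on the player toward a bubble.

    The magnitude is proportional to homogeneity (0..1) and falls off
    with the square of the distance (an assumed falloff). Distances
    below min_dist are clamped so the pull stays finite.
    """
    dx, dy, dz = (b - p for p, b in zip(player, bubble_center))
    dist = math.sqrt(dx * dx + dy * dy + dz * dz)
    if dist == 0.0:
        return (0.0, 0.0, 0.0)  # at the center there is no direction to pull
    magnitude = strength * homogeneity / max(dist, min_dist) ** 2
    return (magnitude * dx / dist,
            magnitude * dy / dist,
            magnitude * dz / dist)
```

Because the sentiment sign is not part of the formula, a uniformly angry comment section pulls just as hard as a uniformly delighted one, matching the "positive or negative" behavior described above.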

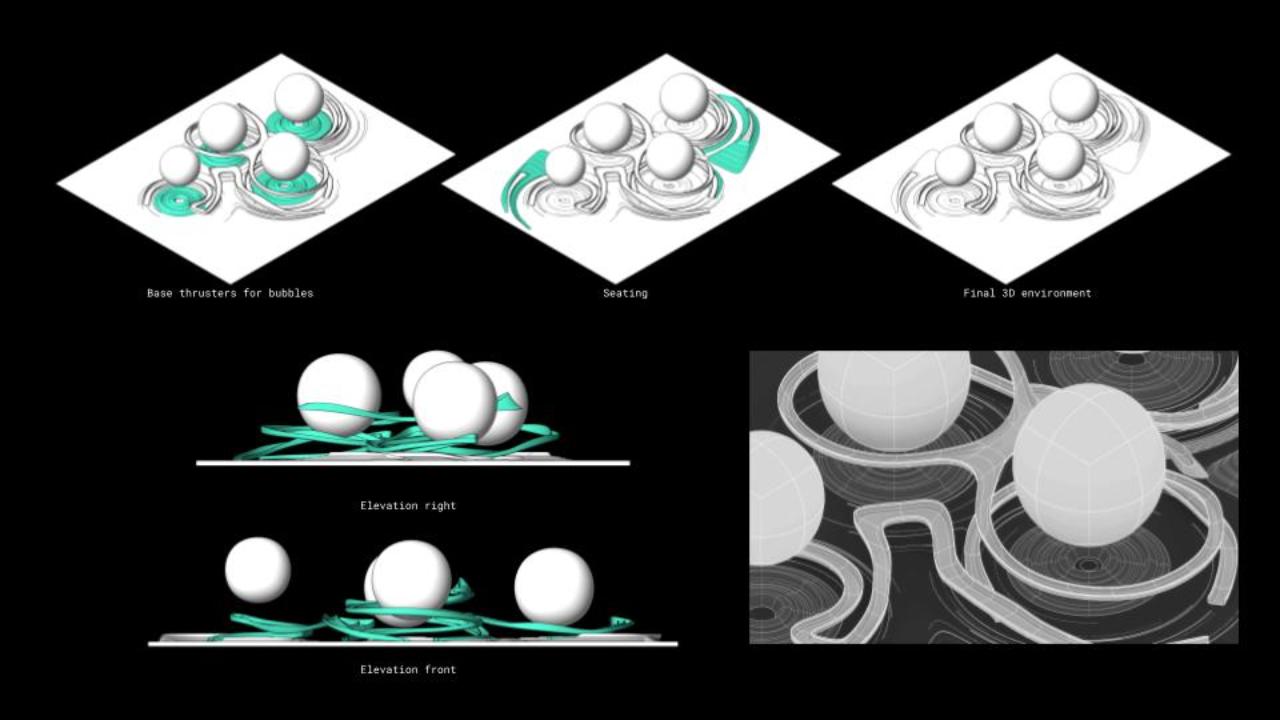

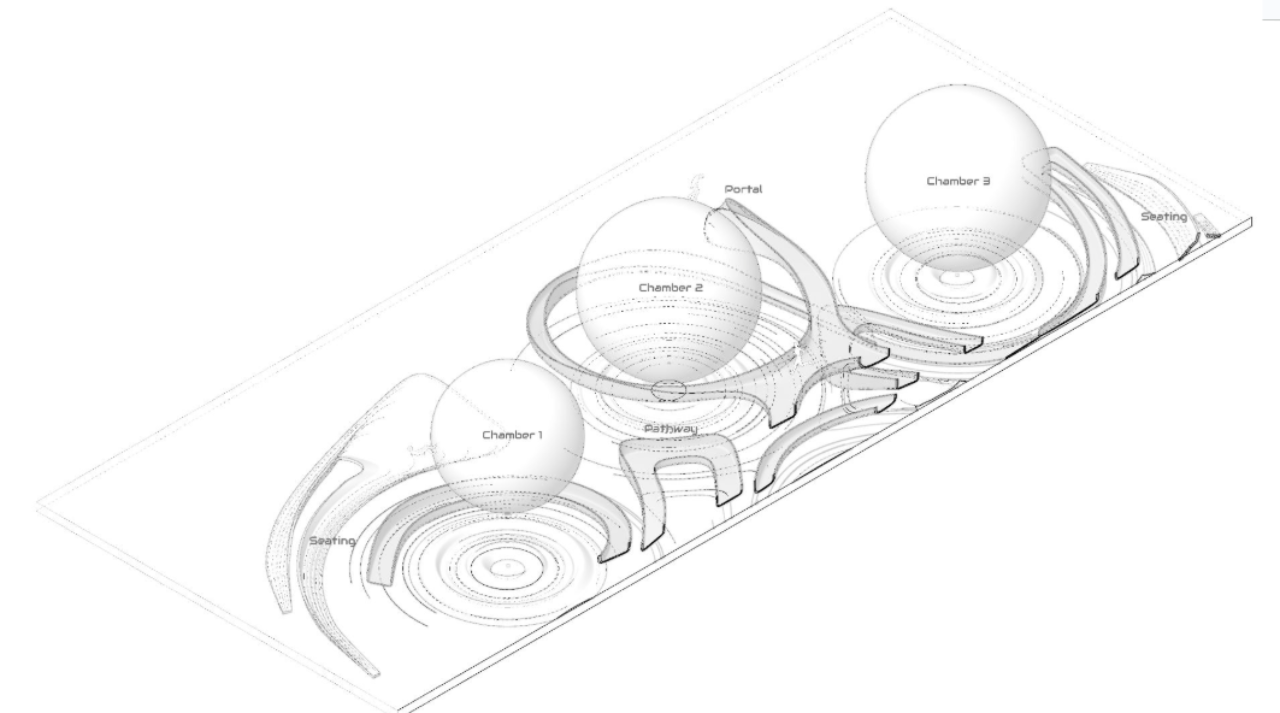

I collaborated with Manas Bhatia on the environment design. To construct the environment, we began by placing discrete points in space, each representing the origin of an echo chamber or ideological bubble. These points acted as repulsive forces, symbolizing the desire to move away from isolated thinking. In contrast, the spaces between them became zones of attraction, forming a magnetic-like field of positive forces. Drawing on the behavior of magnetic fields, we used Rhino and Grasshopper to generate field lines within these neutral zones. These lines guided the design of connective pathways that bridge the divide between bubbles. To add spatial complexity and accessibility, we introduced vertical variation by elevating portions of the pathways, which improved both navigability and immersion.
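
The field-line idea can be sketched in a few lines of Python. This is not the Grasshopper definition used in the project; it is a simplified 2D illustration that assumes inverse-square repulsion from each point and traces a line with fixed-size Euler steps. The function names and parameters are hypothetical.

```python
import math


def field_at(point, sources):
    """Sum of inverse-square repulsion vectors from each source (2D)."""
    fx = fy = 0.0
    for sx, sy in sources:
        dx, dy = point[0] - sx, point[1] - sy
        d2 = dx * dx + dy * dy
        if d2 < 1e-9:
            continue  # skip a source sitting exactly on the sample point
        d = math.sqrt(d2)
        fx += dx / (d2 * d)
        fy += dy / (d2 * d)
    return fx, fy


def trace_field_line(start, sources, step=0.1, max_steps=200):
    """Follow the field direction from `start` and return a polyline."""
    path = [start]
    x, y = start
    for _ in range(max_steps):
        fx, fy = field_at((x, y), sources)
        norm = math.hypot(fx, fy)
        if norm < 1e-9:
            break  # equilibrium point: the field has no direction here
        x += step * fx / norm
        y += step * fy / norm
        path.append((x, y))
    return path
```

Starting a trace midway between two repelling points, for instance `trace_field_line((0.0, 0.1), [(-1.0, 0.0), (1.0, 0.0)])`, yields a line that runs through the gap between them, which is the kind of curve that could seed a connective pathway.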

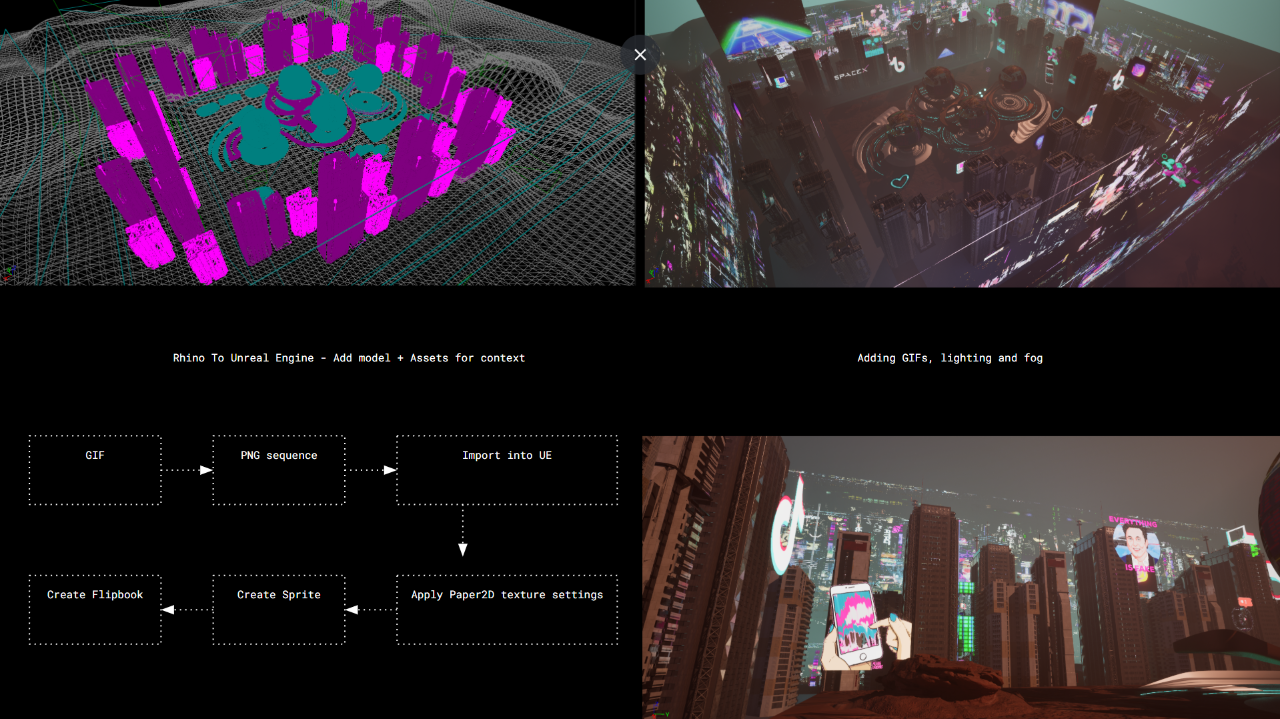

The Rhino model was exported with the Datasmith plugin and imported into Unreal Engine for further development. Within Unreal, we sculpted the landscape, integrated contextual 3D building assets, applied materials, and adjusted the atmospheric conditions through fog and lighting effects. To enhance immersion, we incorporated animated flipbooks made from GIFs and positioned images of cyberpunk cities behind the buildings, adding visual depth and a layered narrative to the environment.

Level 3 — Control Room

Finally, players enter a space that mirrors the social media algorithm itself—a control interface where they can manipulate emotional parameters, observe bubble behavior, and trace how algorithmic curation shapes the emotional spectrum of their feed. This room blends quantitative data with subjective emotional responses, completing the circle from passive observer to active participant in the system.

Game Mechanics Walkthrough

The game unfolds within a defined bounding box that contains all emotional bubbles, generated at the start of each session based on input data. Each bubble represents a distinct emotion, visualized through color, with its size and movement governed by the intensity of the data it embodies. Surrounding each bubble is a collision field that influences the player-controlled character—when the avatar nears a bubble, it is pulled toward it, simulating emotional attraction or entrapment through a shift in gravity direction. Sliders dynamically adjust emotional parameters in real time, reshaping the behavior and scale of corresponding bubbles. Throughout the environment, interactive elements such as learning points trigger prompts upon collision, while props activate UI overlays like sliders and real-time surveillance footage. Teleport nodes shift the character’s position within the world, allowing traversal between spatial zones and emotional states.
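
The session setup and slider behavior described above could be approximated as follows. This is a Python sketch under stated assumptions, not the Unreal Engine implementation: the `Bubble` fields, the uniform random placement, and the rule that radius scales as `base_radius * (1 + intensity)` are all hypothetical.

```python
import random
from dataclasses import dataclass


@dataclass
class Bubble:
    emotion: str
    intensity: float  # 0..1, taken from the input data
    position: tuple   # (x, y, z) inside the bounding box
    radius: float


def spawn_bubbles(intensities, box_min, box_max, base_radius=1.0, seed=None):
    """Create one bubble per emotion at a random point in the bounding box."""
    rng = random.Random(seed)
    bubbles = []
    for emotion, intensity in intensities.items():
        position = tuple(rng.uniform(lo, hi)
                         for lo, hi in zip(box_min, box_max))
        radius = base_radius * (1.0 + intensity)
        bubbles.append(Bubble(emotion, intensity, position, radius))
    return bubbles


def apply_slider(bubble, new_intensity, base_radius=1.0):
    """Update a bubble in place when its emotion slider moves."""
    bubble.intensity = min(max(new_intensity, 0.0), 1.0)
    bubble.radius = base_radius * (1.0 + bubble.intensity)
```

Passing a fixed `seed` makes a session layout reproducible, which can help when comparing how different slider settings reshape the same arrangement of bubbles.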

Data Analysis

Reflection

Through layered design, EmotionEcho.exe proposes a shift in how we interact with online emotion—not as a passive byproduct of scrolling, but as a space we move through, shape, and are shaped by. It’s a speculative tool, a critical toy, and a visualization of the architectures we often inhabit without seeing.

Google Slides

Credits

Concept, design, and development by Skylar (Siqi) Zhang

Advised by Seth Thompson, GSAPP

Built using Unreal Engine 5

References

- Birds of a Feather: How TV Fandoms End Up as Twitter Echo Chambers

- Social Media Echo Chambers: Public Health Implications, published on PubMed Central

Special Thanks

This project would not have been possible without the guidance, teaching, and support from the following people:

Course Instructors

- Computational Design Workflows – Celeste Layne

- Methods as Practices – William Martin and Violet Whiteney

- Explore, Explain, Propose – Laura Kurgan and Snoweria Zhang

- Design in Action – Catherine Griffiths and Seth Thompson

- Virtual Architecture – Nitzan Bartov

- Spatial AI – William Martin

Collaborators & Friends

- Junyin Chen and Rui Wang (Southeast University), whose early conversations helped spark the foundation of this inquiry

- Manas Bhatia, Yilin Zheng, and Catherine Ye (Columbia University), for their continuous support, feedback, and camaraderie throughout the process

Advisor

A special thank you to Seth Thompson, whose mentorship, encouragement, and critical insight guided this project from concept to execution. His role as an advisor has been instrumental in shaping both the vision and the rigor of this work.