Archtalk — Spatial Semantics

John Masataka Xianfeng Jiang

What if you could model a building

just by describing it in words?

How can AI support design?

Today, AI is everywhere—in design, in creation, in construction.

We all know what prompts are. We’ve seen AI generate cat memes, floor plans, poems, and pizza recipes.

But can AI help design?

Can it create new tools?

Yes, and more.

Then, what about humans?

I believe humans are the orchestrators.

We build the systems.

We connect the ideas.

We set the stage, hand the baton to AI, and ask it to play along.

To connect AI, design, and tools—especially in the world of Building Information Modeling (BIM)—we need one thing above all:

Structured data.

Without structured data, AI and design tools can't speak the same language. They're like brilliant minds stuck in a noisy room, unable to hear each other.

But how do we design the right structure?

Some might ask: why not just use IFC?

Sure, IFC is the international standard for building data exchange.

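But let’s be honest: it’s heavy, complex, and hard for AI models to read. Its STEP-based files link every entity to others through numeric references, so the meaning of any one element is scattered across the file.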

It records information, not design intent.

AI needs intent. AI needs clarity.

So I designed a new structured data schema using JSON.

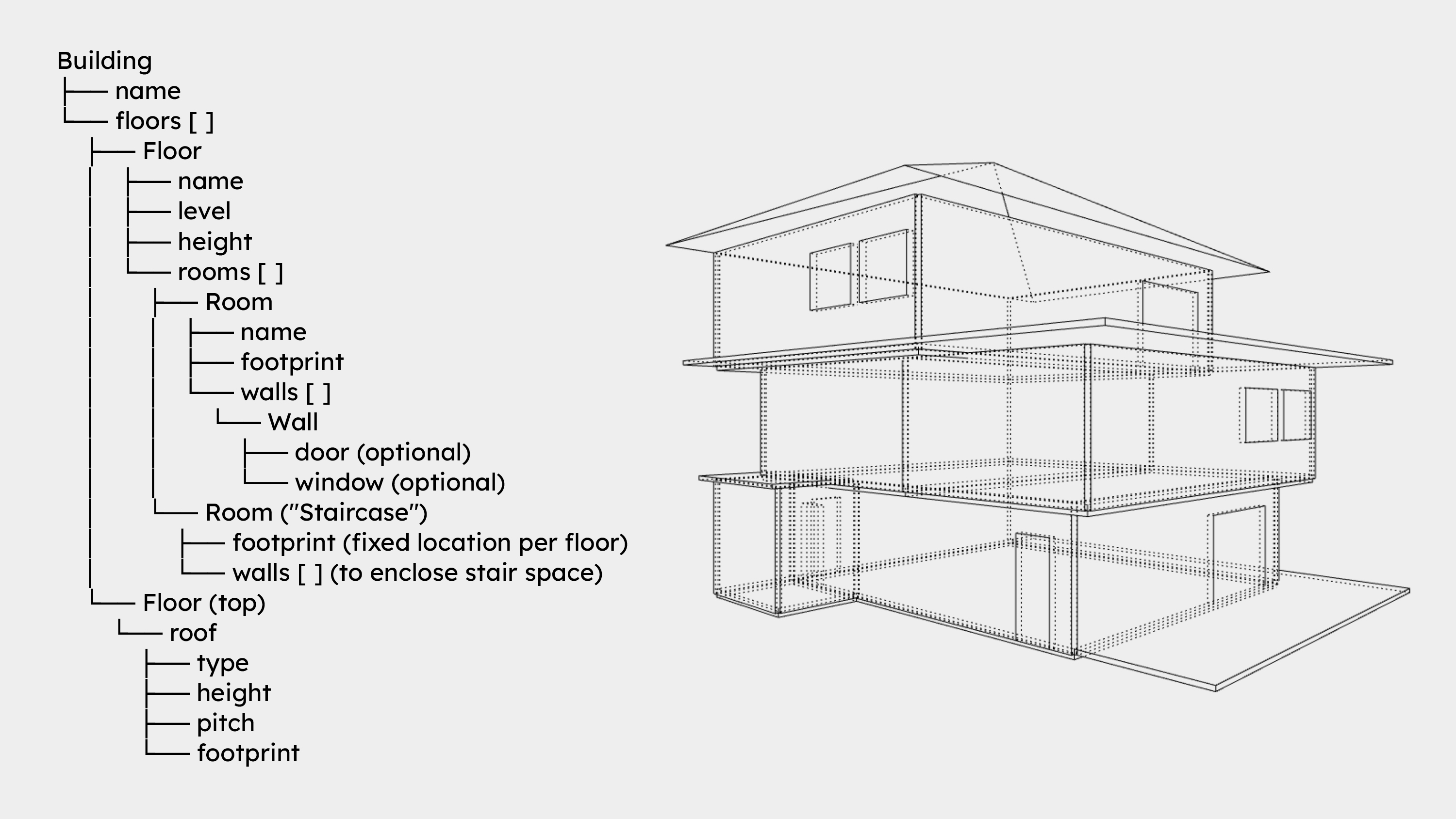

It starts with a building. Inside the building, there are floors.

Floors contain rooms. Rooms have walls. Walls have doors and windows.

Every element has properties—dimensions, materials, positions.

This parent-child structure helps AI understand relationships, hierarchies, and logic.
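The schema itself appears only as an image above, so the TypeScript sketch below is a hypothetical reconstruction of the hierarchy the text describes, not the project's actual file. Every field name (`floors`, `rooms`, `walls`, `openings`, `dimensions`, and so on) is an assumption, and rooms are simplified to rectangles with one wall per side.

```typescript
// Hypothetical Archtalk-style schema: building > floors > rooms > walls > openings.
// Field names are illustrative assumptions, not the project's actual schema.

type Side = "north" | "south" | "east" | "west";

interface Opening {
  id: string;
  type: "door" | "window";
  width: number; // metres
  height: number; // metres
  offset: number; // distance from the wall's start, metres
}

interface Wall {
  id: string;
  side: Side; // assumes rectangular rooms, one wall per side
  thickness: number;
  material: string;
  openings: Opening[];
}

interface Room {
  id: string;
  name: string;
  position: { x: number; y: number }; // south-west corner, metres
  dimensions: { width: number; depth: number; height: number };
  walls: Wall[];
}

interface Floor {
  id: string;
  level: number;
  rooms: Room[];
}

interface Building {
  id: string;
  name: string;
  floors: Floor[];
}

// A one-room example: a bedroom with a door in its south wall.
const example: Building = {
  id: "b1",
  name: "Demo House",
  floors: [
    {
      id: "f1",
      level: 0,
      rooms: [
        {
          id: "r1",
          name: "Bedroom",
          position: { x: 0, y: 0 },
          dimensions: { width: 3, depth: 2.4, height: 2.7 },
          walls: [
            {
              id: "w1",
              side: "south",
              thickness: 0.15,
              material: "timber",
              openings: [{ id: "d1", type: "door", width: 0.9, height: 2.1, offset: 0.3 }],
            },
          ],
        },
      ],
    },
  ],
};

// Walking the tree: each element's context (which room, which floor)
// follows from where it sits in the nesting.
function countOpenings(b: Building): number {
  let n = 0;
  for (const floor of b.floors)
    for (const room of floor.rooms)
      for (const wall of room.walls) n += wall.openings.length;
  return n;
}

console.log(countOpenings(example)); // 1
```

Because a door only exists inside a wall, and a wall only inside a room, a model editing this file never has to guess which room an opening belongs to.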

Using this data, AI can generate and modify architecture—based only on natural language prompts.

“This bedroom is insanely short—my head keeps hitting the wall!

Can we extend it, please?”

“This window is weird. It’s between the bedroom and the kitchen.

Who puts a window there? It’s ridiculous.”

“Hey—I don’t need anyone to build a house for me.

It’s the 21st century. I’ve got tools, I’ve got rights, and I’ve got a voice.”
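One way to turn requests like these into changes is to have the model return small, typed edit operations rather than rewriting the whole file. The sketch below assumes two hypothetical operations, `extendRoom` for the cramped bedroom and `removeOpening` for the misplaced window; neither name comes from Archtalk, and the schema is trimmed to the fields the operations touch.

```typescript
// Hypothetical edit operations an LLM could return for requests like the ones above.
// Operation names and fields are illustrative assumptions, not Archtalk's format.

interface Opening { id: string; type: "door" | "window" }
interface Wall { id: string; openings: Opening[] }
interface Room {
  id: string;
  name: string;
  dimensions: { width: number; depth: number; height: number };
  walls: Wall[];
}

type EditOp =
  | { op: "extendRoom"; roomId: string; axis: "width" | "depth"; delta: number }
  | { op: "removeOpening"; wallId: string; openingId: string };

// Returns a new array and never mutates the input, so earlier states can be kept for undo.
function applyEdit(rooms: Room[], edit: EditOp): Room[] {
  if (edit.op === "extendRoom") {
    const { roomId, axis, delta } = edit;
    return rooms.map((r) =>
      r.id !== roomId
        ? r
        : { ...r, dimensions: { ...r.dimensions, [axis]: r.dimensions[axis] + delta } },
    );
  }
  // Otherwise the edit is a removeOpening operation.
  const { wallId, openingId } = edit;
  return rooms.map((r) => ({
    ...r,
    walls: r.walls.map((w) =>
      w.id !== wallId ? w : { ...w, openings: w.openings.filter((o) => o.id !== openingId) },
    ),
  }));
}

// "Can we extend it, please?" and "Who puts a window there?" might become:
const before: Room[] = [
  {
    id: "r1",
    name: "Bedroom",
    dimensions: { width: 3, depth: 2.5, height: 2.7 },
    walls: [{ id: "w3", openings: [{ id: "win1", type: "window" }] }],
  },
];
const extended = applyEdit(before, { op: "extendRoom", roomId: "r1", axis: "depth", delta: 0.5 });
const after = applyEdit(extended, { op: "removeOpening", wallId: "w3", openingId: "win1" });
console.log(after[0].dimensions.depth, after[0].walls[0].openings.length); // 3 0
```

Small operations are easy to check before applying and easy to show back to the user as "here is what I changed", which a full rewrite of the model does not offer.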

Using this methodology, we can construct countless buildings—each with its own shape, material, and logic.

And all of them begin with language.

What do you think?

Ready to dive into something more advanced?

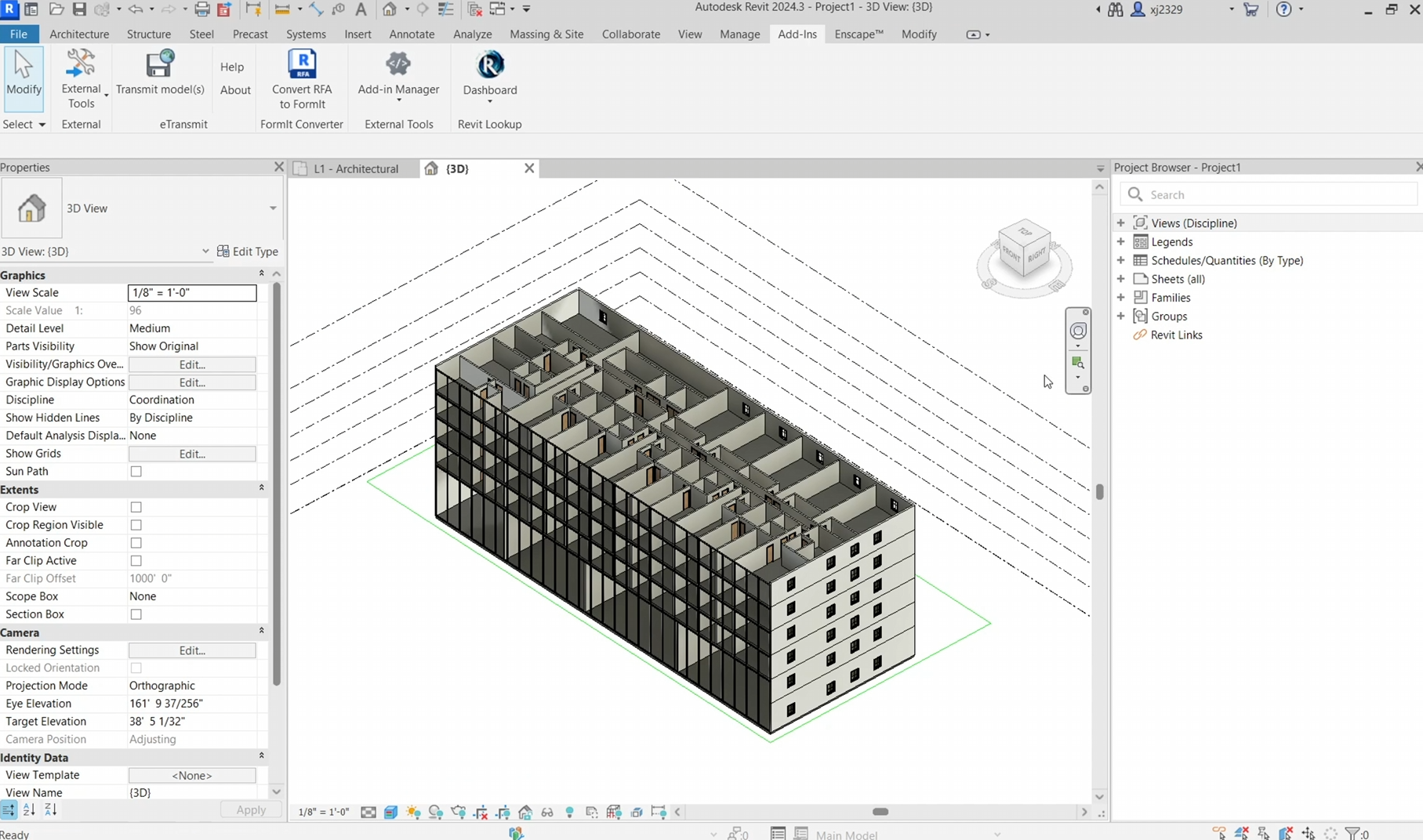

Archtalk is an interactive web application for architectural design and visualization.

It integrates real-time 3D modeling with AI-powered conversational assistance, enabling users to design and modify building structures intuitively.
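The source does not say which 3D library drives the viewer. As a library-agnostic sketch, the function below turns one rectangular room from a hypothetical JSON record into four wall boxes, each with a center and a size. The field names, the axis convention (y up, x east, z north), and the choice to ignore corner joins are all assumptions.

```typescript
// Library-agnostic sketch: one rectangular room (hypothetical JSON fields) -> four wall boxes.
// Axis convention is an assumption: y is up, x points east, z points north.
// Walls are centred on the room outline; corner joins are ignored for brevity.

type Vec3 = { x: number; y: number; z: number };
type Side = "north" | "south" | "east" | "west";

interface RoomSpec {
  position: { x: number; z: number }; // south-west corner on the floor plane
  dimensions: { width: number; depth: number; height: number }; // width along x, depth along z
  wallThickness: number;
}

interface WallBox {
  side: Side;
  center: Vec3;
  size: Vec3; // full extent along each axis
}

function roomToWallBoxes(room: RoomSpec): WallBox[] {
  const { x, z } = room.position;
  const { width: w, depth: d, height: h } = room.dimensions;
  const t = room.wallThickness;
  const y = h / 2; // each box stands on the floor, so its center sits at half height
  return [
    { side: "south", center: { x: x + w / 2, y, z }, size: { x: w, y: h, z: t } },
    { side: "north", center: { x: x + w / 2, y, z: z + d }, size: { x: w, y: h, z: t } },
    { side: "west", center: { x, y, z: z + d / 2 }, size: { x: t, y: h, z: d } },
    { side: "east", center: { x: x + w, y, z: z + d / 2 }, size: { x: t, y: h, z: d } },
  ];
}

const boxes = roomToWallBoxes({
  position: { x: 0, z: 0 },
  dimensions: { width: 4, depth: 3, height: 2.5 },
  wallThickness: 0.2,
});
console.log(boxes[3]); // the east wall
```

With Three.js, for example, each box could become a `Mesh` built from `new THREE.BoxGeometry(size.x, size.y, size.z)` and placed with `mesh.position.set(center.x, center.y, center.z)`. Re-running the conversion whenever the JSON changes is one simple way to keep the view in sync with the model.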

But Archtalk is not a product—it’s a proof of concept.

It’s a validation of a new methodology.

At its core, Archtalk employs a JSON-based data structure to represent architectural elements.

When a user makes a request through the chat interface, the OpenAI API interprets the prompt and returns an updated JSON model, and the app instantly refreshes the 3D visualization.
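That round trip can be sketched as a single handler. This is a minimal sketch under several assumptions: the model is asked to return the complete updated JSON (the source does not say whether Archtalk sends full models or patches), `render` stands in for whatever refreshes the 3D view, and the model name is a placeholder. The OpenAI call uses the public Chat Completions endpoint in JSON mode; the text model is injected as `complete`, so the loop can also run against a stub.

```typescript
// Sketch of the chat -> LLM -> JSON -> 3D refresh loop.
// `complete` is injected, so the loop can run against a stub without network access.

type Model = { floors: unknown[] } & Record<string, unknown>;
type Complete = (system: string, user: string) => Promise<string>;

const SYSTEM_PROMPT =
  "You edit building models. Reply only with the complete updated model " +
  "as a JSON object that keeps the structure of the model you were given.";

// One possible `complete`: OpenAI's Chat Completions endpoint in JSON mode.
// The default model name is a placeholder assumption.
function openAIComplete(apiKey: string, model = "gpt-4o-mini"): Complete {
  return async (system, user) => {
    const res = await fetch("https://api.openai.com/v1/chat/completions", {
      method: "POST",
      headers: { "Content-Type": "application/json", Authorization: `Bearer ${apiKey}` },
      body: JSON.stringify({
        model,
        response_format: { type: "json_object" },
        messages: [
          { role: "system", content: system },
          { role: "user", content: user },
        ],
      }),
    });
    if (!res.ok) throw new Error(`OpenAI request failed with status ${res.status}`);
    const data = (await res.json()) as { choices: { message: { content: string } }[] };
    return data.choices[0].message.content;
  };
}

// Checks just enough structure to avoid drawing garbage; a full app would
// validate against the whole schema.
function parseModel(text: string): Model {
  const parsed: unknown = JSON.parse(text);
  if (typeof parsed !== "object" || parsed === null || !Array.isArray((parsed as Model).floors)) {
    throw new Error("Reply is not a building model with a floors array");
  }
  return parsed as Model;
}

async function handleChat(
  current: Model,
  prompt: string,
  complete: Complete,
  render: (model: Model) => void,
): Promise<Model> {
  const user = `Current model:\n${JSON.stringify(current)}\n\nRequest: ${prompt}`;
  const reply = await complete(SYSTEM_PROMPT, user);
  // parseModel throws on malformed replies, so render() never sees a broken model
  // and the previous state stays on screen.
  const next = parseModel(reply);
  render(next);
  return next;
}
```

Injecting `complete` keeps the rendering side independent of the provider, so swapping models or adding retries does not touch the viewer.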

By combining architectural tools with natural language interaction, Archtalk lowers the barrier to spatial design—making the process more accessible, adaptive, and playful.

What I learned from this project:

- Investigated data structures for bridging AI models with design tools

- Developed and tested conversational design workflows that connect LLMs to a React front end

- Gained hands-on experience in full-stack development

So, can we answer these questions?

- Can this methodology be applied to other platforms like Autodesk tools?

- Can AI optimize building designs based on energy use, materials, and spatial logic? What trade-offs are involved?

- Can a user’s design history be used to generate personalized recommendations?

- How can real-time data improve decisions around costs, scheduling, and environmental impact?

- What would a truly collaborative, real-time design platform look like? How might multiple users co-create, annotate, and iterate together?

A sentence can become a structure.

A phrase can define a room.

A conversation can shape a city.


The future of design may not begin with blueprints, but with words.

Typed. Spoken. Dreamed. Interpreted.

And if language can build a house, what else can it build?

The possibilities are as infinite as imagination—and now, as accessible as a chat box.

Welcome to the next chapter of architectural thinking.

Let’s keep talking.

Acknowledgments

This project would not have been possible without the generous guidance and support I received throughout my academic journey.

I feel especially grateful to have taken part in such inspiring classes—not only in the MSCDP program and at GSAPP, but also across Columbia University, including the Journalism, Engineering, and Business Schools. These courses meaningfully contributed to this research. Listed below are a few that had a particularly strong influence:

By semester and in alphabetical order:

- Celeste Layne — Computational Design Workflows

- Mario Giampieri — Mapping Systems

- Meli Harvey and Luc Wilson — Computational Modeling

- Violet Whitney and William Martin — Methods As Practices

- Alex Barrett — Design by Development: Underwriting and Analysis

- Brian Borowski — Data Structures in Java

- Chok Lei — Financial Modeling Case Studies

- Danil Nagy — Design Intelligence

- Jia Zhang — Data Visualization for Architecture, Urbanism, and the Humanities

- Jonathan Weiner — The Written Word

- Joseph Brennan — Re-Thinking BIM

- Laura Kurgan and Snoweria Zhang — Explore, Explain, Propose

- Nakul Verma — Machine Learning

- Tomasz Piskorski — Real Estate Finance

- Catherine Griffiths and Seth Thompson — Design in Action

- Charlie Stewart — Project Management: From Idea to Execution

- Danil Nagy — Generative Design

- Jonathan Stiles — Exploring Urban Data with Machine Learning

- Lydia Chilton and Aimee Oh — User Interface Design

- Michael Vahrenwald — Architectural Photography: From the Models to the Built World

- William Martin — Spatial AI

This project is not just the result of code and design—it is the result of conversation, collaboration, and care.

About the Author

John Masataka Xianfeng Jiang is an architect and AI researcher based in New York and Tokyo. He was formerly a project manager at Kengo Kuma & Associates (KKAA) and is a member of the Architectural Institute of Japan (AIJ). Notable completed works include Endo at the Rotunda (London), Aman Miami Beach (Florida), Brooktree House (Los Angeles), and Alberni Tower (Vancouver).
